Automated Testing for Shopping Rewards: How Kurashiru Rewards Improved Development Efficiency and Quality by Reducing Reliance on Manual Testing

Kurashiru, Inc.

For our 24th user interview, MagicPod CEO Nozomi Ito spoke with representatives from Kurashiru, Inc. about their experience using MagicPod. The discussion covered specific use cases, selection criteria, and more.


Guided by its vision “BE THE SUN,” dely aims to be a positive, impactful presence in society. The company runs Japan’s leading recipe video platform Kurashiru, the shopping support app Kurashiru Rewards, the lifestyle media TRILL, and LIVEwith, one of Japan’s largest live-streamer management agencies.

Kurashiru Rewards is a mobile app designed to transform everyday shopping into a rewarding experience. Users earn points for common activities like traveling to stores, browsing flyers, purchasing items, and scanning receipts. These points can then be exchanged for various rewards.

KEY POINTS

- Before MagicPod, 5–6 developers spent 30 minutes to an hour manually checking critical functions before each release.

- MagicPod was chosen for automation partly because its GUI lets non-engineers take part in testing.

- Scheduled and pre-release checks were automated and running stably within about a month of adopting MagicPod.

- MagicPod also supported service-specific test cases, such as those for location-based features.

Participants (pictured from the right):

- Fumito Nakazawa, Development Lead for Kurashiru Rewards

- Nozomi Ito, MagicPod CEO

Mr. Nakazawa (hereafter, Nakazawa): I joined dely in 2020 as an iOS engineer, focusing primarily on Kurashiru’s iOS app. About two years later, I became involved in the launch of Kurashiru Rewards, where I now serve as the development lead.

Kurashiru Rewards is available on iOS, Android, and the web, and lets users earn points through daily activities and redeem them for benefits such as gift cards. Our development organization has 40–50 members, about 30 of whom work on Kurashiru Rewards.

Mr. Ito (hereafter, Ito): That’s even larger than the Kurashiru team!

Nakazawa: Yes, this is a high-priority project for us.

Challenges Before MagicPod

Nakazawa: Initially, we had no dedicated QA team. Developers managed test cases using spreadsheets and performed manual function checks before releases.

However, as a service grows, new features are added and the number of test items increases, so manual testing becomes more complex and time-consuming. With releases roughly twice a week, relying solely on manual checks raises the risk of gaps in testing. Moreover, because the service handles points and retail store information, any defect can have a significant impact.

Ito: Did you actually face any issues?

Nakazawa: No issue ever escalated into a critical problem, and thanks to phased releases, the defects we did find could be fixed quickly. Still, the development team agreed that some of them could have been caught before release. That shared reflection made us recognize the need for proactive measures and led us to consider automated testing tools.

Why MagicPod?

Ito: Was Kurashiru using any automated testing tools before?

Nakazawa: The Kurashiru team still does its QA manually. Kurashiru Rewards, however, was a new project, which gave us room to try different approaches, so we took the opportunity to explore automated testing tools.

We specifically considered the following three options:

- Platform-specific UI testing frameworks (XCTest for iOS, Espresso for Android)

- Maestro, which enables test execution via YAML-based configurations

- GUI-based automated testing tools like MagicPod

Platform-specific UI testing frameworks would have required separate implementations for iOS and Android, plus extra communication to keep the test cases aligned across platforms. Given the implementation and maintenance workload, we ruled this option out.

We also considered Maestro, but because its test cases are written in YAML, we were concerned that testing would depend heavily on engineers. Engineers were going to manage the tests at first, but if a future QA team that included non-engineers took over, we expected YAML-based operation to be a hurdle. So we decided against it.

Ultimately, we chose MagicPod because its GUI makes creating test cases intuitive for engineers and non-engineers alike. That made it the best fit for our needs.

Ito: Thank you! I assume you ran a trial before making your final decision. What were the key evaluation points during that process?

Nakazawa: There were two main points we evaluated.

The first was functionality. Kurashiru Rewards includes features such as location tracking and health features that count steps. For location tracking in particular, we have a feature where a gauge fills up according to the distance traveled. MagicPod's ability to simulate movement by setting latitude and longitude coordinates was especially appealing.

Ito: Were you physically moving to test this before?

Nakazawa: That’s right! (laughs) When the feature was first released, we handed out a development build to team members, who walked around and checked the distance traveled in a debug menu. Automating this process was a huge help.

The second was the quality of customer support during the trial period. The development team received prompt and accurate responses to technical questions and issues. This significantly reduced our concerns about post-adoption operations and support.
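Why is setting coordinates enough to exercise a distance-based gauge? Here is a minimal sketch. It assumes the app sums the great-circle (haversine) distance between successive location fixes and fills the gauge toward a fixed target. The interview does not say how Kurashiru Rewards actually computes distance, so the functions `haversine_m` and `gauge_fill`, the 1 km target, and the sample coordinates are all hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def gauge_fill(route: list[tuple[float, float]], target_m: float = 1000.0) -> float:
    """Hypothetical gauge: fraction (0.0 to 1.0) of target_m covered along the route."""
    total = sum(haversine_m(*p, *q) for p, q in zip(route, route[1:]))
    return min(total / target_m, 1.0)

# Simulated movement: hypothetical coordinates a test might set one after another,
# each step moving about 445 m north.
route = [(35.6812, 139.7671), (35.6852, 139.7671), (35.6892, 139.7671)]
print(f"gauge: {gauge_fill(route):.2f}")
```

A test that sets these coordinates in order should see the gauge at roughly 89% without anyone leaving their desk, which is the kind of check the team previously did on foot.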

How MagicPod is Utilized

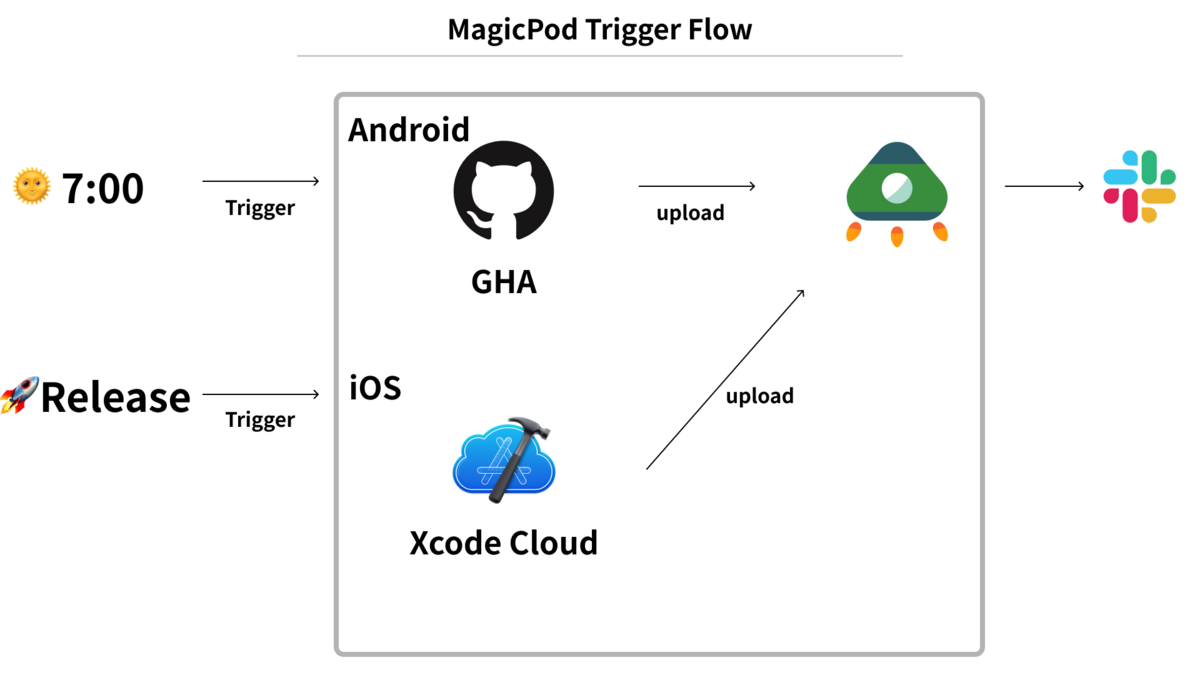

Nakazawa: We have defined the service's core functions and set up a system to detect regressions in them. Specifically, we run tests at two points:

- A scheduled execution every morning at 7:00 AM

- Whenever a release branch is created

Previously, a team of 5–6 developers would spend 30 minutes to an hour working through a spreadsheet to manually check a list of critical functions. That process is now automated, which saves significant time. Manual checks also tended to become less careful over time, which made test quality inconsistent, so automating them has been a major improvement.

When we first introduced MagicPod, we had 23 test cases for both iOS and Android; that number has since grown to around 25–26. Team members decide for themselves when to add test cases, typically while developing new features. We plan to keep expanding our test suite, but we are still evaluating how far to take that expansion as development progresses.
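The two run timings Nakazawa describes (a daily 7:00 AM run and a run whenever a release branch is created) can be expressed as a small decision helper. This is a hedged sketch only: it assumes the schedule is in Japan Standard Time (UTC+9, no daylight saving time) and that release branches use a `release/` prefix. Both assumptions, and the helper names `next_scheduled_run` and `should_run_on_branch_created`, are hypothetical; in practice the scheduling lives in MagicPod and the team's CI setup.

```python
from datetime import datetime, time, timedelta, timezone

# Assumption: the 7:00 AM schedule is in JST (UTC+9, no daylight saving time).
JST = timezone(timedelta(hours=9))
SCHEDULED_TIME = time(7, 0)
RELEASE_PREFIX = "release/"  # hypothetical branch naming convention

def next_scheduled_run(now: datetime) -> datetime:
    """Return the next daily 7:00 AM JST run at or after `now` (a timezone-aware datetime)."""
    local = now.astimezone(JST)
    candidate = datetime.combine(local.date(), SCHEDULED_TIME, tzinfo=JST)
    if candidate < local:
        # Today's run has already passed, so the next one is tomorrow morning.
        candidate += timedelta(days=1)
    return candidate

def should_run_on_branch_created(branch_name: str) -> bool:
    """Trigger a regression run only when the newly created branch is a release branch."""
    return branch_name.startswith(RELEASE_PREFIX)

if __name__ == "__main__":
    now = datetime(2024, 5, 1, 8, 30, tzinfo=JST)
    print(next_scheduled_run(now))  # 2024-05-02 07:00:00+09:00
    print(should_run_on_branch_created("release/1.2.0"))  # True
```

Using a fixed UTC+9 offset rather than a named time zone keeps the sketch dependency-free, which is safe here only because JST does not observe daylight saving time.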

Ito: How are testing operations structured?

Nakazawa: We considered creating a dedicated QA team or assigning the task to app engineers, but ultimately decided that, at first, I would run it on my own. Our reasoning was that one person who knows both the iOS and Android specifications can more easily spot discrepancies between the two platforms.

In addition, without a dedicated QA team, managing testing across multiple people could result in high communication costs and the risk of test cases becoming outdated or neglected. In practice, once I got used to creating and managing test cases, it didn’t require much additional effort.

Since then, we have handed the responsibility over to the iOS and Android engineers and now run testing collaboratively. We’ve issued five accounts each to iOS and Android engineers, and everyone has a basic understanding of how to use MagicPod. For instance, when an issue comes up during feature development, the responsible engineer may add test cases to prevent it from recurring. Likewise, when a test case fails, the owner of that feature investigates and fixes the issue.

Ito: It sounds like you are planning to eventually establish a QA team.

Nakazawa: Exactly. Although one reason we chose MagicPod was its accessibility for non-engineers, for now we are focusing on handling as much as possible within the development team. When the organization or the service grows to the point where a dedicated QA team becomes essential, we’ll revisit and refine the structure.

Efforts and Challenges to Achieve Stable Operations

Ito: How long did it take to achieve stable operations after the introduction of MagicPod?

Nakazawa: It took about a month. Initially, there were parts where we weren't familiar with how to use the tool, so it was a continuous trial-and-error process. By reviewing the results of the daily scheduled runs every morning, making corrections if there were issues, and checking again the next day, we gradually figured out how to operate it efficiently, leading to stabilization.

Ito: Are there still cases where the test results report errors but nothing is actually wrong, such as false positives or flaky tests?

Nakazawa: Yes, especially in areas that depend on API behavior. For example, when an API returns different responses depending on the time of day, tests can become unstable. After some team discussion, we decided to exclude areas with strong external dependencies like these from the test targets for now.

Ito: How has the internal response been since the introduction, and what challenges have emerged?

Nakazawa: The internal response has been mostly positive. In particular, with the automation of test cases for core functions, we've heard many comments like, "We now have an environment where we can focus on development with peace of mind." Also, since MagicPod supports web testing, we shared this information with the web team, who were still doing manual testing. However, at this stage, no other teams have adopted it.

One challenge is keeping test cases up to date as the UI changes. If we don't update them promptly, we end up having to fix everything in bulk later, which takes significant effort. This happened recently, and it reminded the team how important continuous test case maintenance is.

Ito: Are you using any CI tools?

Nakazawa: Yes. For Android, we use GitHub Actions, while for iOS, we use Xcode Cloud to generate the build and send it to MagicPod. Every morning, the app is built, and then tests are automatically executed.

Final Thoughts

Nakazawa: I strongly recommend starting with a free trial to evaluate how the tool works in your actual business environment. During the trial, check whether your service's specific features can actually be tested, and form a concrete picture of how you would use the tool in practice.

Even products with rapid development cycles, like Kurashiru Rewards, can use it effectively with proper operational planning. That said, expect some instability in the early stages, and keep adjusting your test cases to improve their accuracy.

By overcoming these challenges, it’s possible to enhance developers' psychological safety and establish an efficient test operation system. Test automation isn’t something that can be completed overnight, but from a long-term perspective, it can significantly contribute to improving the quality of the development process.

Kurashiru, Inc.

- Tech blog (Japanese):